A few years ago, AI in healthcare was mostly PowerPoints and promises. By 2026, it’s becoming a reality — and increasingly routine. Two-thirds of U.S. health systems are now using or actively exploring AI tools. That’s not just pilots or proof-of-concepts, but integration into core workflows, from charting to staffing to diagnostics.

But the shift isn’t smooth. It’s stratified.

Large, urban systems with strong infrastructure and dedicated innovation teams are moving fast — embedding AI into clinical and operational layers. But smaller and rural providers are still stuck trying to figure out how to implement basic automation, let alone train staff on algorithmic triage tools.

This is the tension at the heart of AI in healthcare today: a moment of real momentum, tempered by wildly uneven adoption. The promise is still there. But now it’s being filtered through staffing shortages, cost pressures, clinician burnout, and massive variation in system capacity.

This piece gives you a grounded look at what’s driving AI adoption, where it’s actually working, what’s still falling short, and what it’ll take to scale this technology responsibly across the entire care ecosystem.

Clinical Adoption Is Moving From Pilots to Practice

AI is showing up in clinical care, but not in the sweeping, diagnostic-overhaul way it’s often sold. Instead, adoption is happening in pockets, with varying levels of success depending on the setting, workflow, and staff buy-in.

Radiology is the most saturated use case.

In one study, about 90% of health systems had deployed AI in imaging and radiology in at least some areas, making it the most common use case, and nearly half of those had fully implemented it. But high adoption doesn’t always mean high impact. Many clinicians still see AI here as a second set of eyes, not a decision-maker — and in most cases, it hasn’t dramatically changed clinical outcomes.

Surgery is another growing zone.

Robotic assistance has been around for years, but new AI-powered systems are making subtle adjustments mid-procedure to reduce complications or shorten operative time. The results are promising — particularly in high-volume orthopedic and general surgery programs — but still highly dependent on surgical team integration and training.

Personalized treatment planning is gaining real traction.

Oncology and chronic disease care teams are starting to use AI models that synthesize patient data, clinical guidelines, and genomic info to suggest individualized regimens. These tools don’t override physicians; they offer a starting point that’s more tailored and faster than manual chart review.

Risk prediction tools are increasingly embedded into care workflows.

This is especially true for common but high-stakes conditions like sepsis, and for situations that lead to hospital readmission. In one study, 67% of health systems had deployed AI for early detection of sepsis, though success has been mixed. When the alerts are accurate, they buy critical time. When they’re noisy, clinicians learn to ignore them.
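To see why a noisy alert gets ignored, it helps to work through the arithmetic. The sketch below computes the share of fired alerts that are true positives (positive predictive value) from a model's sensitivity, its specificity, and how common the condition is. The function name and all three input numbers are hypothetical, chosen only for illustration; they are not taken from any study cited here.

```python
def alert_precision(sensitivity: float, specificity: float, prevalence: float) -> float:
    """Return the share of fired alerts that are true positives (PPV)."""
    true_pos = sensitivity * prevalence                    # sick patients who trigger an alert
    false_pos = (1.0 - specificity) * (1.0 - prevalence)   # healthy patients who trigger an alert
    total_alerts = true_pos + false_pos
    if total_alerts == 0:
        return 0.0  # no alerts fire at all
    return true_pos / total_alerts


# Hypothetical inputs (assumptions, not measured values):
# the model catches 80% of cases, clears 90% of non-cases,
# and 5% of monitored patients actually have the condition.
ppv = alert_precision(sensitivity=0.80, specificity=0.90, prevalence=0.05)
print(f"{ppv:.0%} of alerts are true positives")
```

Under these assumed numbers, roughly 30% of alerts point at a real case, so about seven in ten are false alarms even though the model looks strong on paper. That gap is one plausible reason clinicians tune alerts out.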

The takeaway here isn’t that AI is replacing clinical judgment. It’s that the most effective tools support it — by surfacing the right information at the right time, without demanding total trust or total control.

Operational AI Is Transforming the Hospital Back Office

If clinical AI gets the headlines, operational AI is where most of the traction is quietly happening. These tools may not be sexy, but they’re solving the problems that drain time, money, and attention every day.

Revenue cycle is the most active zone. About 46% of hospitals are using AI in their RCM operations to improve billing accuracy — helping scrub claims, detect clinical documentation gaps, and reduce denials. Full automation is still rare, but early results show improvements in both speed and revenue capture — especially for systems with complex payer mixes.

Staffing and scheduling are starting to shift. AI-powered workforce planning tools are being used to forecast patient volume and optimize shift assignments, particularly in large systems. They’re not perfect, but they reduce overstaffing and last-minute scrambles. In some hospitals, AI chatbots are handling schedule changes, PTO requests, and low-stakes HR queries. This lightens the load on overextended nurse managers.
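As a rough illustration of the forecasting idea, the sketch below averages recent patient counts for the same weekday and converts the result into a nurse headcount. It is a deliberately simple stand-in, not how any particular vendor tool works. The function names, the four-week window, the 1:5 nurse-to-patient ratio, and the census figures are all assumptions made for the example.

```python
import math
from statistics import mean


def forecast_census(history: list[int], window: int = 4) -> float:
    """Forecast the next census as the average of the most recent `window` values."""
    if not history:
        raise ValueError("history must not be empty")
    return mean(history[-window:])


def nurses_needed(expected_patients: float, patients_per_nurse: int = 5) -> int:
    """Round up so the assumed nurse-to-patient ratio is never exceeded."""
    return math.ceil(expected_patients / patients_per_nurse)


# Hypothetical Monday day-shift census for the last six weeks
mondays = [38, 42, 40, 45, 43, 44]
expected = forecast_census(mondays)
staff = nurses_needed(expected)
print(f"Expected patients: {expected}, nurses to schedule: {staff}")
```

Real workforce tools would likely account for seasonality, acuity, and local staffing rules. The point is only that a forecast turns into a concrete shift decision before the day starts, rather than after the unit is already short.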

Supply chain and operations are another frontier. Some systems now use AI to monitor inventory, predict shortages, and optimize OR scheduling. And according to a study, others are automating “in-basket” work — triaging messages, routing lab results, or escalating follow-up requests. These tools don’t transform care, but they help keep the system from stalling.

To summarize:

- Billing: More accurate claims, fewer denials, faster payments

- Staffing: Predictive models reduce coverage gaps and overtime

- Operations: AI tools smooth logistics, freeing up human focus

The thread connecting all of this is that AI is moving from isolated tasks to system-wide infrastructure. Not flashy. Just functional.

Patient Engagement Is Starting to Feel the Impact of AI

This is where the gap between potential and reality is still wide, but narrowing fast.

Chatbots and virtual assistants are beginning to play a bigger role in how patients interact with their health systems. As of 2025, about 19% of practices use AI-powered tools to handle appointment scheduling, answer frequently asked questions, and route messages. Adoption is still in the minority, but growth is accelerating, driven by patient demand for faster, more responsive communication.

Personalized health content is also gaining traction. Roughly 10% of physicians now use AI tools to deliver tailored recommendations — reminders, educational content, follow-up instructions — based on patient history and behavior. It’s not transformative, but it’s meaningful. Patients are more likely to engage with content that feels relevant, and systems are starting to see improved adherence and satisfaction scores as a result.

Remote monitoring is where the scale is already here. By 2024, about 30 million Americans were using remote patient monitoring devices — from glucose sensors and blood pressure cuffs to wearables — and AI is quietly helping make that firehose of data usable. Instead of flooding clinicians with raw numbers, AI helps filter and flag the outliers, surfacing signals that matter and suppressing noise.

Where this works best is in tight feedback loops:

- The AI flags a change.

- A nurse or coordinator reviews it.

- The patient hears from a human — fast.

That last part is key. Patients still want, and need, human connection. For AI to work in engagement, it shouldn’t just talk at them. It should make real connection easier, faster, and more consistent.
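The "flag a change" step in that loop can be sketched in a few lines. The example below compares each new reading against the patient's own recent baseline and flags only sharp deviations for human review. It is a minimal sketch under stated assumptions: the function name, the seven-day baseline, the z-score threshold of 3, and the blood pressure values are all hypothetical, and production monitoring systems will use more sophisticated methods.

```python
from statistics import mean, stdev


def flag_outliers(
    readings: list[float],
    baseline_size: int = 7,
    z_threshold: float = 3.0,
) -> list[int]:
    """Return indexes of readings that deviate sharply from the patient's own baseline."""
    baseline = readings[:baseline_size]
    if len(baseline) < 2:
        return []  # not enough data to estimate spread
    mu, sigma = mean(baseline), stdev(baseline)
    if sigma == 0:
        return []  # a perfectly flat baseline gives no usable scale
    return [
        i
        for i, value in enumerate(readings[baseline_size:], start=baseline_size)
        if abs(value - mu) / sigma > z_threshold
    ]


# Hypothetical daily systolic blood pressure readings (mmHg)
systolic = [122, 118, 125, 120, 123, 119, 121, 124, 158, 120]
flagged = flag_outliers(systolic)
print(f"Readings to route to a nurse: {flagged}")
```

Here only the reading of 158 (index 8) is surfaced, while the normal day-to-day wobble stays out of the clinician's queue. Everything after the flag, the review and the call to the patient, remains a human step.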

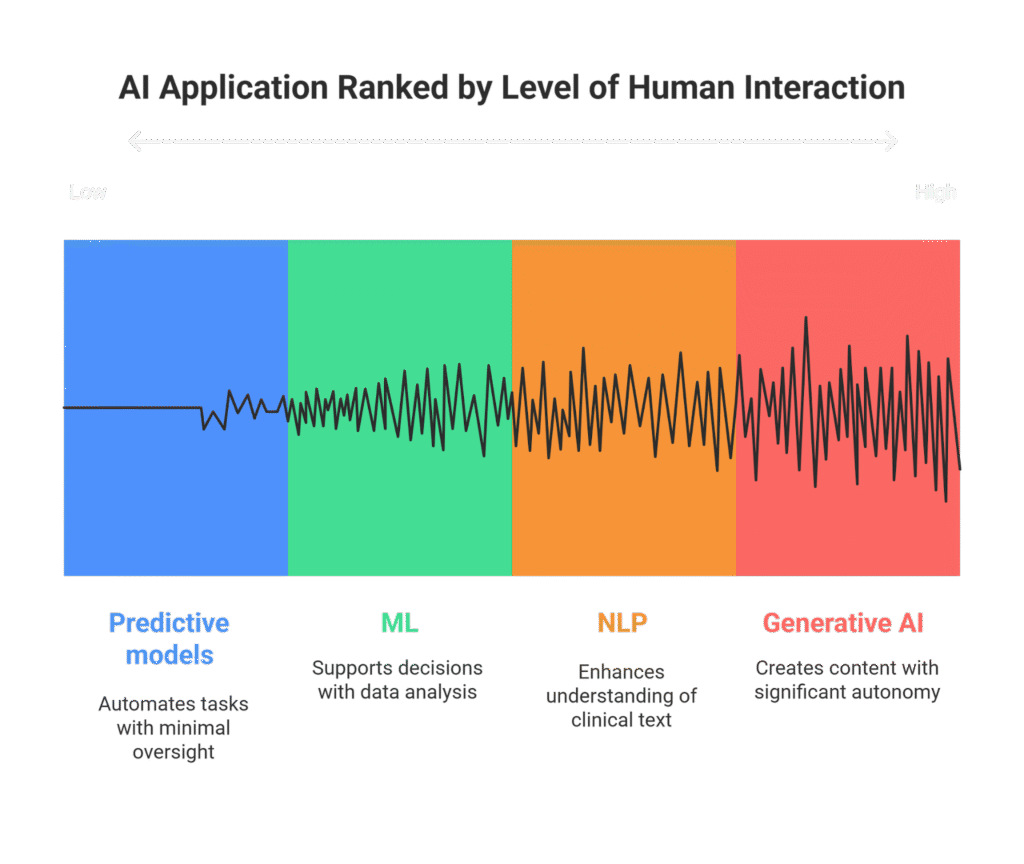

The Technologies Behind Healthcare AI Adoption

The surge in healthcare AI isn’t driven by a single breakthrough — it’s the result of several technologies maturing at the same time, each unlocking specific, practical use cases.

Generative AI is leading adoption velocity.

These tools are reshaping clinical documentation, powering chatbots, and generating tailored patient instructions. In many health systems, ambient AI scribes are the first real-world application of generative models — tools that listen during a visit and auto-generate clinical notes. They don’t just save time; they reduce burnout. And they’re often the gateway to broader adoption.

Epic’s recent partnership with Microsoft to roll out an AI-powered clinical scribe highlights how quickly these tools are moving into mainstream workflows.

Machine learning is powering deeper pattern recognition.

Used in diagnostics, image analysis, and predictive modeling, ML algorithms help flag anomalies, suggest care pathways, and identify at-risk patients. Radiology and pathology are still the most common domains, but ML is also being used in population health to stratify risk and identify gaps in care.

Natural language processing (NLP) is unlocking “dark data.”

Much of what lives in EHRs is still unstructured: free-text notes, scanned PDFs, referral letters. NLP tools help systems parse and interpret that mess, making it searchable and usable. NLP is also used in real time for transcription, language translation, and triage support in digital front doors.

Predictive analytics is becoming operational glue.

AI models forecast staffing needs, project patient flow, and predict readmissions. These tools don’t just help you respond — they let you anticipate. And while they’re not foolproof, they’re improving fast as more data gets funneled into them.

Together, these technologies form the new backend of modern healthcare. Most patients won’t see them, but they’ll feel the difference.

The Biggest Hurdles for AI Adoption in Health Systems in 2025

For all the progress, AI adoption in healthcare is still full of friction — and the cracks are becoming harder to ignore.

Adoption remains uneven.

Larger, urban systems are leading the charge, equipped with the funding, data infrastructure, and specialized teams to pilot and scale AI tools. But rural and smaller hospitals are often left watching from the sidelines, constrained by budget, bandwidth, or outdated tech stacks. The risk is a widening divide, where AI becomes another layer of inequality in care access.

ROI is still a question mark in key areas.

While tools like ambient documentation and billing automation show clear time savings, many clinical AI models — particularly for risk prediction — haven’t yet proven consistent returns. Health system CFOs are right to be skeptical. In a climate of tight margins, even a promising tool needs to show its worth quickly and clearly.

Bias and safety concerns are slowing things down.

No one wants to be the headline about an AI-driven misdiagnosis. Hospitals are rightly cautious about deploying black-box models, especially when explainability is limited. Compliance teams are demanding transparency, auditability, and human override options — and in some cases, slowing or halting rollouts until those boxes are checked.

The costs are real.

Even when the tech itself is affordable, the cost of implementation — training, integration, oversight — is significant. And ongoing monitoring isn’t optional. AI isn’t a one-time deployment. It’s a living system that needs constant tuning.

Workforce buy-in is still a hurdle.

Some clinicians are curious. Others are skeptical — or outright resistant. Without thoughtful onboarding and clear benefits, AI tools can feel like just another layer of complexity. Change fatigue is real, and it’s one of the biggest threats to long-term adoption.

None of this makes AI a bad bet. But it does make clear: without investment in governance, equity, and culture, even the best tools won’t get far.

What’s Next for AI in Hospitals

The AI landscape in healthcare is shifting — from isolated pilots to embedded systems, from experiments to infrastructure. And the next phase won’t be louder. It’ll be quieter, more focused, and harder to notice — unless you know what to look for.

Ambient AI is poised to scale.

Tools that passively support clinical workflows — like ambient documentation and smart in-basket routing — are earning clinician trust because they reduce friction without demanding attention. Adoption will grow as models improve, EHR integration deepens, and documentation continues to dominate physician time.

Operational AI will become standard.

Scheduling, staffing, logistics, and billing are now seen as areas where AI should be used. The systems that succeed will treat these tools as part of the enterprise stack, not as vendor experiments. The real shift isn’t in the headlines, but in how teams describe what’s working on the ground.

Patient-facing AI will move past chatbots.

Expect more personalized, AI-generated content tied to specific conditions, care plans, and behaviors. The best tools won’t replace human touchpoints — they’ll make them more targeted, timely, and effective.

But three things will determine whether this next phase sticks:

- Governance – Systems that build clear policies, oversight, and vendor requirements will avoid most of the noise and risk.

- Equity – Tools that work only for the well-connected or well-resourced will face growing scrutiny.

- Scale – Pilots don’t change systems. Platforms do. Leaders will invest in scaling what works, not endlessly testing what might.

The coming years aren’t about new inventions. They’re about making the current stack reliable, trusted, and scalable.

AI in Healthcare Is Real but Uneven

The real shift coming in 2026 isn’t volume. It’s intent. Health systems are getting more selective, more grounded, and more focused on tools that actually work in the mess of clinical and operational reality.

Progress won’t come from scale alone. It’ll come from clarity — on where AI adds value, where it doesn’t, and how to support the people doing the work.